Job Manager Demo

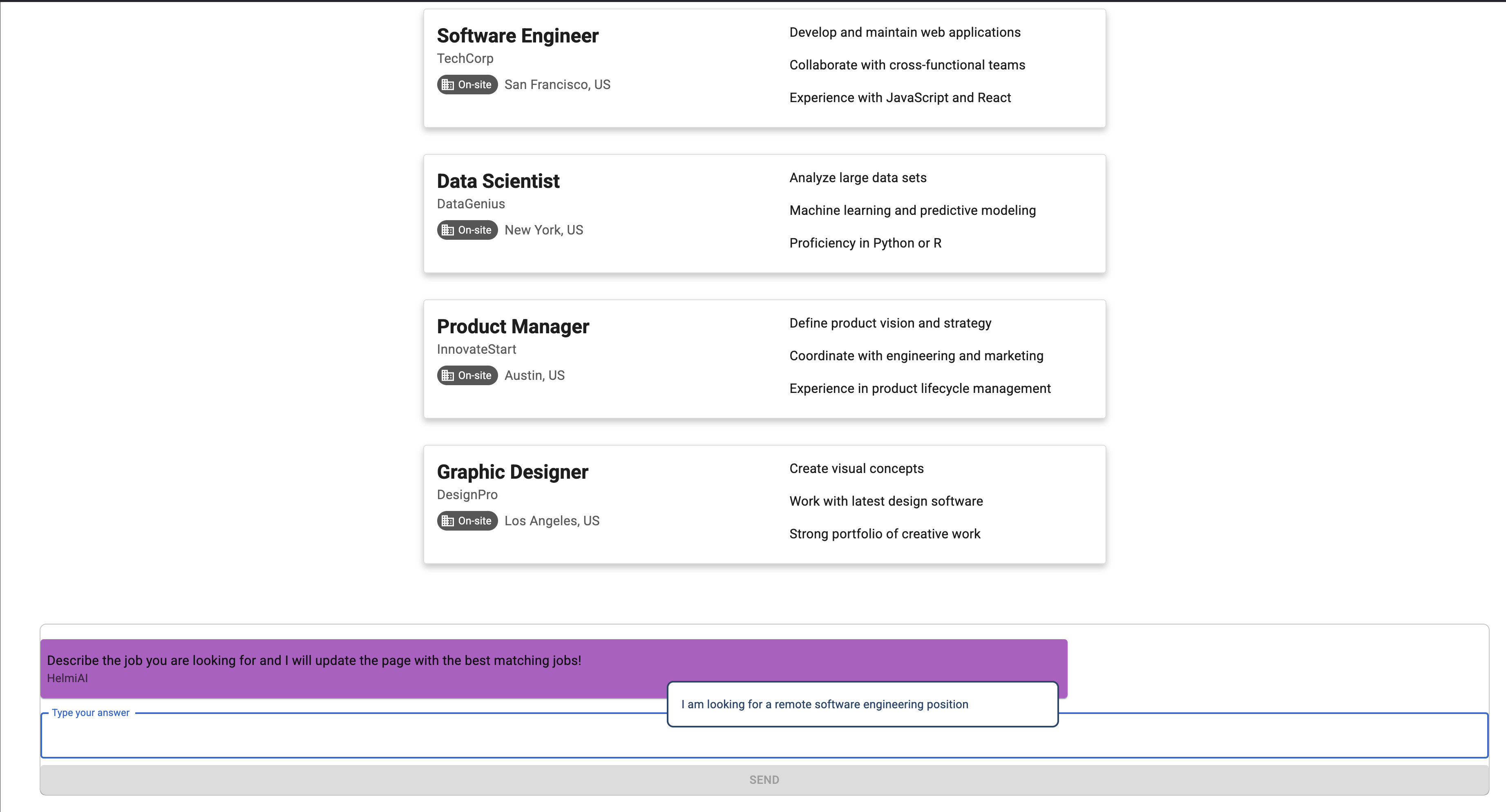

Embedded in this page is a demo of a job management application that I built with a fellow engineer. Job Manager is a proof of concept (POC) of a modern career services platform that helps job seekers find relevant opportunities through AI-powered matching. Users describe their preferences in natural language, and the system uses that description to match them with job postings.

The interactive demo shows the core interaction: you chat with the agent to describe your background and the job you're looking for. The agent then updates the job listings to better match what you described, for example a JavaScript background and a preference for remote work.

Architecture & Technology

The application is built with a Next.js frontend (TypeScript, Material-UI) and a NestJS backend, deployed using serverless AWS technologies including Lambda, S3, and CloudFront. The AI matching engine uses LangChain RAG with OpenAI GPT-4o-mini and vector search (OpenSearch) for semantic job discovery. Infrastructure is managed with OpenTofu across multiple AWS accounts (Development, Production, Administrative), with GitLab CI/CD pipelines using OIDC authentication.
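To make the idea of semantic job discovery more concrete, here is a minimal sketch of the ranking step behind vector search. It is not the project's code: the real application delegates this to OpenSearch and gets its embeddings from a model, whereas this sketch ranks in memory over hand-written toy vectors. The `JobPosting` shape, the function names, and the three-dimensional embeddings are all assumptions made for illustration.

```typescript
// Hypothetical shape of an indexed job posting (the real schema is not shown here).
interface JobPosting {
  id: string;
  title: string;
  embedding: number[]; // in practice produced by an embedding model
}

// Cosine similarity: 1 means same direction, 0 means unrelated.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Embedding dimensions differ");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) {
    return 0; // avoid dividing by zero for empty vectors
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the topK postings most similar to the query embedding.
function rankJobs(
  queryEmbedding: number[],
  jobs: JobPosting[],
  topK: number
): JobPosting[] {
  return jobs
    .map((job) => ({ job, score: cosineSimilarity(queryEmbedding, job.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map(({ job }) => job);
}

// Toy data: the dimensions loosely stand for [JavaScript, remote, Java/on-site].
const sampleJobs: JobPosting[] = [
  { id: "a", title: "Remote JavaScript Developer", embedding: [0.9, 0.8, 0.1] },
  { id: "b", title: "On-site Java Engineer", embedding: [0.1, 0.0, 0.9] },
  { id: "c", title: "Remote Data Analyst", embedding: [0.1, 0.9, 0.2] },
];

// Stand-in for the embedding of "JavaScript developer looking for remote work".
const sampleQuery = [1, 1, 0];

// The remote JavaScript posting ranks first, followed by the remote analyst posting.
console.log(rankJobs(sampleQuery, sampleJobs, 2).map((job) => job.title));
```

In a RAG setup, the top-ranked postings would then be passed to the LLM as context for its reply, which is why the quality of this retrieval step largely determines how relevant the updated listings feel.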

Key insights gained

Getting to design and build a modern, LLM chat-driven user experience and application was the core motivation for the project. The end result proved how transformative LLMs are in creating new user experiences. It's clear to me that text and other modalities like speech will replace buttons and the mouse in certain use cases, even in the near future. For a use case like job search, where every job opportunity and every job seeker is unique, it's practically impossible to build a UI with just the right filters to suit everyone's - if not anyone's - needs.

The journey of designing and then building the application also proved to me that I'm at my best when I own the whole stack, from the backend to the - in this case imaginary - customer, and have the freedom to prioritise features and mold the process that builds them. It also showed how drastic an edge a duo of talented engineers has in velocity over more corporate (read: bloated) team setups. The productivity gap also widened throughout the project, as rapid improvements in AI code assistants shifted the emphasis more and more from building basic functionality to application-specific design and problem solving.

I encourage the reader to try out the new ways of building experiences. The usual "it's fun" and "you learn a ton" still hold, and now it's also quick!

Key Features

Conversational job search with LLM-powered RAG

Automated, periodically run job scraping and indexing

SSO authentication (Google) and JWT

Vector-based semantic search

Serverless AWS with complete OpenTofu resource definitions

GitLab CI/CD and multi-environment orchestration